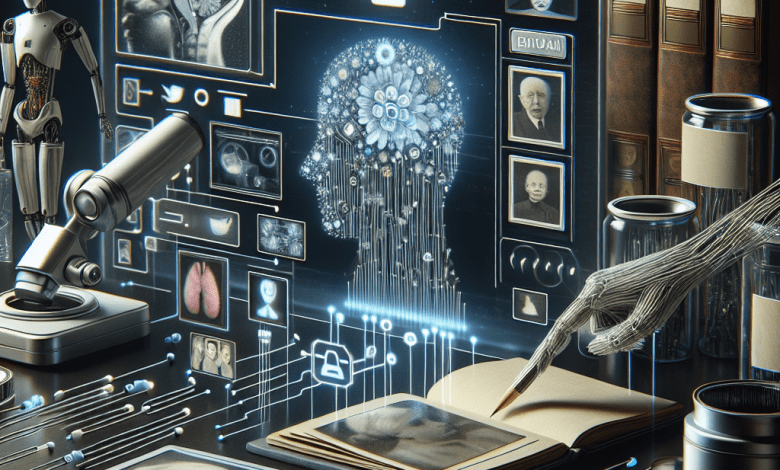

The Ethics of AI in Personal Memories: Navigating Digital Immortality

Let’s talk about something that’s both exciting and a little unsettling: the rise of artificial intelligence in our personal memories. Imagine a world where your favorite moments aren’t just stored in photos or videos but are enhanced or even recreated by AI. Fascinating, right? But here’s the thing: as we edge closer to what people are calling “digital immortality”, some big ethical questions come up. Questions about privacy, consent, and what it really means to remember.

AI and Memories: What’s the Deal?

Here are some ways AI is already changing how we think about memories:

- Memory and Companion Tools: Ever wanted to relive your favorite holiday or that hilarious college story? AI companion apps like Replika can already simulate conversations, and brain-computer interface projects like Neuralink hint at a future where technology connects more directly with the brain, although neither is a proven memory-recall aid today. Think of them as early steps toward a digital assistant for your best moments.

- Virtual Loved Ones: Companies like HereAfter AI are taking it further, creating digital avatars of loved ones. Using recorded interviews and AI, these avatars can “talk” with you even after someone is gone. Comforting? Maybe. But it raises questions about where to draw the line.

- Deepfake Resurrections: Then there’s deepfake technology, which can create eerily lifelike videos of people who’ve passed away. Remember when Kanye West gave Kim Kardashian a hologram of her late father, Robert Kardashian, for her 40th birthday? It’s powerful tech, but it blurs the boundary between preserving memories and manipulating them.

The Big Ethical Questions

All this innovation brings some tough ethical challenges. Here’s what we need to think about:

- Consent: How do you get someone’s permission to recreate their digital likeness after they’re gone? Unless they agreed while they were alive, you can’t. And that’s a problem.

- Privacy Risks: AI relies on data—lots of it. But how do we ensure that personal, often sensitive, information stays safe? What happens if it’s misused?

- Authenticity: As AI-generated recreations become more convincing, it’s harder to tell what’s real. Could this change how we view history—or even how we process emotions?

- Emotional Impact: Digital avatars can be comforting, but they might also complicate grief. Could these AI companions create dependencies that make it harder to move on?

Are the Rules Keeping Up?

The short answer? Not really. Here’s where things stand:

- GDPR: The EU’s General Data Protection Regulation is strong on privacy, but it does not cover the personal data of deceased people, leaving digital rights after death to individual member states.

- US Policies: In the U.S., it’s a patchwork. Privacy and post-mortem publicity rules vary from state to state, and there’s no unified federal framework.

- Industry Guidelines: Companies like OpenAI promote ethical practices, but these are voluntary and lack enforcement power.

Balancing the Good with the Risks

AI has huge potential to reshape how we preserve memories. It could help people with dementia reconnect with their past or let families share their stories with future generations. But we’ve got to proceed carefully:

- Transparent Policies: We need clear rules about who owns this data and how it can be used.

- Public Awareness: People should understand the risks and rewards before diving into AI-powered memory tools.

- Ethical Design: Developers need to think about these challenges from the start to avoid problems down the line.

Looking Ahead

AI will keep changing how we remember, and the ethical questions will only grow more complex. The challenge? Finding the right balance. This isn’t just about pushing tech boundaries; it’s about protecting what makes us human. Let’s make sure we move forward thoughtfully, with values intact.
